LoRA Fine-tuning & Hyperparameters Explained (in Plain English)

Low-Rank Adaptation (LoRA) is a fine-tuning method introduced by a team of Microsoft researchers in 2021. Since then, it has become a very popular approach to fine-tuning large language models, diffusion models (such as those used for image generation), and other types of AI models.

LoRA is a type of Parameter-efficient Fine-tuning (PEFT).

In this article, we’ll explain how LoRA works in plain English. You will gain an understanding of how it compares to full-parameter fine-tuning and what is going on behind the scenes. At the end, we'll introduce QLoRA and explain how the hyperparameters work.

Why LoRA?

The motivation behind LoRA is to get high-quality fine-tuning results more efficiently, since full-parameter fine-tuning requires a lot of memory, and other methods to date are either impractical or make concessions in quality. High-performance GPUs are a precious resource, so more efficient methods can make fine-tuning more accessible and allow for greater levels of experimentation.

LoRA fine-tuning is indeed efficient, but it’s important to understand that it also yields high-quality results. With the right hyperparameters, LoRA can match the quality of full-parameter fine-tuning.

How Full-parameter Fine-tuning Works

Full-parameter fine-tuning is exactly what it sounds like—training all the parameters of a model. It’s continuing the original pre-training of the model, except fine-tuning is often “supervised” learning, meaning that the training data or examples come with “right” answers in the form of prompt/completion pairs.

To make sure terminology isn’t a roadblock to understanding, let’s go over the basics.

Parameters are also called weights, which are numbers used for the model’s internal calculations. We’ll use the terms parameters and weights interchangeably.

When you are fine-tuning, you are changing the weights of the model itself, training it to be better at your chosen task.

All the weights in a neural network are grouped into multiple layers or modules. Think of each layer as a collection of numbers that can be represented as a matrix. If your math is rusty, don’t let the word “matrix” scare you—it’s just a table of numbers.

These matrices are very large. For fine-tuning a 13B parameter model, there are 13 billion total weights to adjust, and you do this repeatedly. Whenever the model finishes processing a batch of samples (prompt/completion pairs), it calculates the adjustments using what is called a "loss function." This is basically the model trying to make its best guess for what the output should have been, then calculating how far off it was from the correct answer, and finally how much to adjust each weight up or down to get closer next time. That’s referred to as learning, training, or adaptation.

In order for a model to learn, the GPU has to store all these parameters in memory, plus data for intermediate math and steps.

How LoRA Works

LoRA does two fundamental things differently from full-parameter fine-tuning:

1. Tracks changes to weights instead of updating weights directly.

2. Decomposes the large matrix of weight changes into two smaller matrices that contain the “trainable parameters.”

#2 is where the secret sauce is at. It sounds complicated, but it’s actually relatively simple.

It hinges on this concept: you can multiply two small matrices to get a larger matrix.

Take a look at this example that multiplies a 5x1 matrix (one column) by a 1x5 matrix (one row) to get a 5x5 matrix out:
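A minimal NumPy sketch of this kind of multiplication, with made-up numbers: a column that is 5 rows tall times a row that is 5 columns wide produces a 5x5 table.

```python
import numpy as np

# Made-up values, purely for illustration.
col = np.array([[1], [2], [3], [4], [5]])   # shape (5, 1): one column
row = np.array([[2, 0, 1, 3, 1]])           # shape (1, 5): one row

full = col @ row                            # shape (5, 5)
print(full)
print("values stored in the two small matrices:", col.size + row.size)
print("values in the full matrix:", full.size)
```

Every entry of `full` is just one number from `col` times one number from `row`, which is why ten stored values are enough to generate all twenty-five.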

You can do this process in reverse, too, starting with a matrix and trying to find two matrices that, when multiplied, get close in value to the original.

This is called matrix decomposition.

Since the two smaller, "decomposed" matrices are only one row or column deep, they have a rank of 1. We’ll explain what higher rank looks like in the next section.
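One standard way to run the process in reverse is a truncated singular value decomposition (SVD). The sketch below is for intuition only, and the matrix `M` is made up. Note that LoRA itself does not decompose an existing matrix this way; it learns the two small matrices directly during training.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.normal(size=(5, 5))        # made-up matrix we want to approximate

U, S, Vt = np.linalg.svd(M)
col = U[:, :1] * S[0]              # 5x1 matrix
row = Vt[:1, :]                    # 1x5 matrix
approx = col @ row                 # 5x5 matrix built from only 10 stored values

print("rank of the approximation:", np.linalg.matrix_rank(approx))
print("size of M:", np.linalg.norm(M))
print("approximation error:", np.linalg.norm(M - approx))
```

The error is not zero: a rank-1 product can only capture part of an arbitrary 5x5 matrix, which is the precision trade-off discussed later in the article.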

The full 5x5 matrix above has 25 values in it, whereas if we count the values in the decomposed matrices, there are just 10 (5 + 5).

As the matrix we are trying to approximate gets larger and larger, we are working with a smaller and smaller proportion of values in our decomposed matrices, compared to the full-size matrix.

| Total Parameters | Full Matrix Dimensions | Parameters in Decomposed Matrices (Rank 1) | Relative Number of Values |
| --- | --- | --- | --- |
| 25 | 5x5 | 10 | 40% |
| 100 | 10x10 | 20 | 20% |
| 2.5k | 50x50 | 100 | 4% |
| 1M | 1k x 1k | 2k | 0.2% |
| 13B | 114k x 114k | 228k | 0.002% |

So, we can see that working with decomposed matrices reduces the amount of numbers involved significantly. On a GPU, that translates directly into less memory usage.
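The percentages in the table come from simple counting, sketched here with a hypothetical helper named `rank1_fraction`. The fraction simplifies to 2/n, which is why it shrinks as the matrix grows.

```python
def rank1_fraction(n: int) -> float:
    """Share of values needed by a rank-1 decomposition of an n x n matrix."""
    full = n * n                 # values in the full matrix
    decomposed = n + n           # one n x 1 column plus one 1 x n row
    return decomposed / full

for n in (5, 10, 50, 1_000):
    print(f"{n}x{n}: {rank1_fraction(n):.1%}")
```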

Now let’s apply this to fine-tuning models. In the context of LoRA, we’ll refer to these two smaller, decomposed matrices as the “change matrices,” because they track the changes we want to make to the model’s weights.

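At the start of training, the change matrices are set up as a blank slate: in the paper, one of them starts as all zeroes and the other gets small random values, so their product (the weight change) is all zeroes. Then, as we calculate the loss for a batch, they update to reflect the change in weights.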

We store the model’s weights in memory as we go, but don’t update them directly. The LoRA paper refers to this as “freezing” the weights of the model. If we updated them as we went, we wouldn’t be able to save all that memory, so it’s a matter of using GPU resources efficiently.
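Here is a toy sketch of that training setup in plain NumPy, under stated assumptions: a single 6x6 layer, made-up data, a mean-squared-error loss, and hand-written gradient descent. The names `A`, `B`, and `true_shift` are illustrative. Following the paper's initialization, `B` starts at zero and `A` gets small random values, so the weight change starts at zero. The thing to notice is that gradients are computed only for the change matrices while `W` is never modified.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 6, 2                                   # toy layer size and rank

W = rng.normal(size=(d, d))                   # pretrained weights: frozen
W_frozen_copy = W.copy()                      # kept only to verify W never changes

A = rng.normal(scale=0.1, size=(r, d))        # change matrix, small random start
B = np.zeros((d, r))                          # change matrix, zero start => B @ A == 0

# Made-up task: the targets come from weights that differ from W by a low-rank shift.
true_shift = 0.1 * rng.normal(size=(d, r)) @ rng.normal(size=(r, d))
X = rng.normal(size=(64, d))
Y = X @ (W + true_shift).T

def loss_fn(A, B):
    pred = X @ (W + B @ A).T
    return np.mean((pred - Y) ** 2)

initial_loss = loss_fn(A, B)
lr = 0.1
for step in range(2000):
    pred = X @ (W + B @ A).T
    G = 2.0 * (pred - Y) / pred.size          # gradient of the loss w.r.t. pred
    grad_B = G.T @ (X @ A.T)                  # gradients exist only for A and B
    grad_A = (G @ B).T @ X
    A -= lr * grad_A
    B -= lr * grad_B

final_loss = loss_fn(A, B)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
print("W untouched:", np.array_equal(W, W_frozen_copy))
```

A real training run would use an adaptive optimizer and automatic differentiation; the memory savings come from not keeping gradients or optimizer state for `W`.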

It is trivial, at any point in time, to multiply our two change matrices together into a matrix the same size as the layer in the model, representing the change in weights we want to apply to our model.

Then, we simply add the multiplied change matrix to the model’s original matrix for the layers we’re tuning to get a shiny new fine-tuned model. Alternatively, this can be done on-the-fly at inference time with an "adapter," depending on what is more efficient for the use case.
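The two deployment options give the same outputs, which a short NumPy sketch can confirm. The matrices here are random stand-ins for a trained layer and its change matrices, and scaling is left out for simplicity.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 8, 2
W = rng.normal(size=(d, d))        # original layer weights
B = rng.normal(size=(d, r))        # "trained" change matrices (random stand-ins)
A = rng.normal(size=(r, d))
x = rng.normal(size=(3, d))        # a batch of 3 inputs

# Option 1: merge once, then use the layer exactly as before.
W_merged = W + B @ A
y_merged = x @ W_merged.T

# Option 2: leave W untouched and add the adapter's contribution on the fly.
y_adapter = x @ W.T + (x @ A.T) @ B.T

print("same result:", np.allclose(y_merged, y_adapter))
```

Merging costs nothing extra at inference time, while keeping the adapter separate makes it easy to swap several fine-tunes over one shared base model.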

According to the LoRA paper, the net effect of the LoRA method is up to a 3x savings in memory usage, and in some cases, higher throughput (faster training):

LoRA makes training more efficient and lowers the hardware barrier to entry by up to 3 times when using adaptive optimizers since we do not need to calculate the gradients or maintain the optimizer states for most parameters. Instead, we only optimize the injected, much smaller low-rank matrices.

Their results were comparable in quality to full-parameter fine-tuning:

LoRA performs on-par or better than finetuning in model quality on RoBERTa, DeBERTa, GPT-2, and GPT-3, despite having fewer trainable parameters, a higher training throughput, and, unlike adapters, no additional inference latency.

But, there is a catch.

You have to choose the right rank for your task.

Matrix decomposition of rank 1 makes a pretty big concession in precision.

Multiplying two smaller matrices doesn’t give us the same results as would tuning the parameters directly, because the resulting table from multiplying the two small matrices is only an approximation.

LoRA does ultimately adjust all the parameters of the model, just not as precisely when the rank is low.

For most tasks, a low rank is probably fine.

The theory for why is explained in the paper like this:

...learned over-parametrized models in fact reside on a low intrinsic dimension. We hypothesize that the change in weights during model adaptation also has a low “intrinsic rank,” leading to our proposed Low-Rank Adaptation (LoRA) approach.

"Over-parameterized" means that these models are really bigger than they need to be for the training data, and not all of the parameters strictly matter. There is redundancy, robustness, and resiliency within the parameters.

Also, if you have a massive model that performs well at zero-shot learning on a wide variety of tasks, it’s basically super smart, powerful, and capable. Now if you want to improve performance or fine-tune it to only do something specific that is a subset of what it could do before through prompt engineering (a “downstream task”), you don’t need a lot of precision to align it to that narrower task.

There are also a lot of other examples in machine learning where using low-rank approximations leads to good outcomes, so this theory builds on prior work in machine learning, per the paper:

Low-rank structure is very common in machine learning. A lot of machine learning problems have certain intrinsic low-rank structure (Li et al., 2016; Cai et al., 2010; Li et al., 2018b; Grasedyck et al., 2013). Moreover, it is known that for many deep learning tasks, especially those with a heavily over-parametrized neural network, the learned neural network will enjoy low-rank properties after training (Oymak et al., 2019). Some prior works even explicitly impose the low-rank constraint when training the original neural network (Sainath et al., 2013; Povey et al., 2018; Zhang et al., 2014; Jaderberg et al., 2014; Zhao et al., 2016; Khodak et al., 2021; Denil et al., 2014);

Basically, you can get away with very rough updates. Because the model is so massive, it’s not particularly sensitive to any given parameters being off by a lot, or a little. Its overall size and complexity make up for it.

There is an exception to this, which is when your task is very complex or you need to shift the model to do something that’s more out-of-scope from its original training.

We can most likely still use LoRA, but we need to increase the rank.

Increasing the Precision of LoRA with Rank

If we want our LoRA method to be more precise, we can increase the rank of our change matrices. We still get an output matrix the same size as the layer we’re training in the model, but we encode more information in each change matrix so that when they are multiplied, the resulting numbers are more precise.

See how the input matrices are larger now? They’re now 2 columns and 2 rows deep, respectively.

Now, in this case, we’re training 20 parameters (10 + 10) to get a matrix that is only 25 parameters large. Naturally, it will be a lot more precise, but the difference between the overall number of parameters has become quite small (25 - 20 = 5), which is just because we’re working with small examples.
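To see the precision gain concretely, the sketch below approximates one made-up 5x5 matrix at rank 1 and rank 2 using truncated SVD. SVD is used here only as a convenient way to find good factors for the illustration; LoRA learns its factors during training instead.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.normal(size=(5, 5))                 # made-up target matrix

U, S, Vt = np.linalg.svd(M)

def low_rank(rank):
    """Return the two factors of the best rank-`rank` approximation of M."""
    left = U[:, :rank] * S[:rank]           # 5 x rank
    right = Vt[:rank, :]                    # rank x 5
    return left, right

errors = {}
for rank in (1, 2):
    left, right = low_rank(rank)
    errors[rank] = np.linalg.norm(M - left @ right)
    print(f"rank {rank}: {left.size + right.size} values, error {errors[rank]:.3f}")
```

Doubling the rank doubles the number of stored values (10 to 20) and lowers the approximation error.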

Here is a chart that shows how rank determines the number of trainable parameters for different model sizes, assuming they were all in one big matrix.

| Rank | 7B | 13B | 70B | 180B |
| --- | --- | --- | --- | --- |
| 1 | 167,332 | 228,035 | 529,150 | 848,528 |
| 2 | 334,664 | 456,070 | 1,058,301 | 1,697,056 |
| 4 | 669,328 | 912,140 | 2,116,601 | 3,394,113 |
| 8 | 1,338,656 | 1,824,281 | 4,233,202 | 6,788,225 |
| 16 | 2,677,312 | 3,648,561 | 8,466,404 | 13,576,450 |
| 512 | 85,673,987 | 116,753,964 | 270,924,934 | 434,446,406 |
| 1,024 | 171,347,973 | 233,507,927 | 541,849,869 | 868,892,813 |
| 8,192 | 1,370,783,787 | 1,868,063,416 | 4,334,798,948 | 6,951,142,502 |
| 16,384 | 2,741,567,575 | 3,736,126,833 | 8,669,597,896 | 13,902,285,004 |

Now, here is the same chart showing the LoRA trainable parameters as a percentage of the overall total model parameters:

| Rank | 7B | 13B | 70B | 180B |
| --- | --- | --- | --- | --- |
| 1 | 0.002% | 0.002% | 0.001% | 0.000% |
| 2 | 0.005% | 0.004% | 0.002% | 0.001% |
| 4 | 0.010% | 0.007% | 0.003% | 0.002% |
| 8 | 0.019% | 0.014% | 0.006% | 0.004% |
| 16 | 0.038% | 0.028% | 0.012% | 0.008% |
| 512 | 1.224% | 0.898% | 0.387% | 0.241% |
| 1,024 | 2.448% | 1.796% | 0.774% | 0.483% |
| 8,192 | 19.583% | 14.370% | 6.193% | 3.862% |
| 16,384 | 39.165% | 28.739% | 12.385% | 7.723% |
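The numbers in both charts can be reproduced with a few lines of Python. The helper name `lora_trainable_params` is made up for this sketch, and it relies on the same simplification as the charts: all of a model's weights are treated as one big square matrix, which is not how real models are laid out.

```python
import math

def lora_trainable_params(total_params: float, rank: int) -> float:
    """Trainable values if all weights sat in one square matrix (the charts' simplification)."""
    side = math.sqrt(total_params)          # about 114k for a 13B model
    return 2 * rank * side                  # one (side x rank) plus one (rank x side) matrix

for name, total in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9), ("180B", 180e9)]:
    for rank in (1, 8, 512):
        n = lora_trainable_params(total, rank)
        print(f"{name} rank {rank}: {n:,.0f} trainable ({n / total:.3%})")
```

In a real model, the count is the sum over every adapted layer of `rank * (rows + columns)`, so it depends on which layers you choose to tune.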

Note: Rank doesn’t have to be a power of 2, but if you want to test a lot of different ranks, doubling it will yield more meaningful results than marginal changes.

As you can see, as model size increases, the efficiency gains from LoRA training increase.

Also, you can use fairly high values for rank and still work with many fewer parameters than in full-parameter fine-tuning. The actual upper limit for rank depends on the size of the layers being tuned in the model.

As you increase the rank of the change matrices, the resulting LoRA weight change matrix has more precision in each weight that can be ultimately applied to the original weights.

This is sometimes expressed as “percentage of trainable parameters,” which is easy to misinterpret, because it may seem to imply that we’re not training all the parameters in the model.

With LoRA, you are always training the full parameters of each layer that it’s configured to train.

However, you are tracking these changes in the two smaller matrices, which are the “trainable parameters.”

If you simply divide the number of values you’re tracking in those matrices by the total parameters in the layer, it’s a very small percentage.

But we’re not ignoring any of the layer’s parameters when we ultimately apply our changes.

They all get updated, just less precisely.

What is the right LoRA Rank?

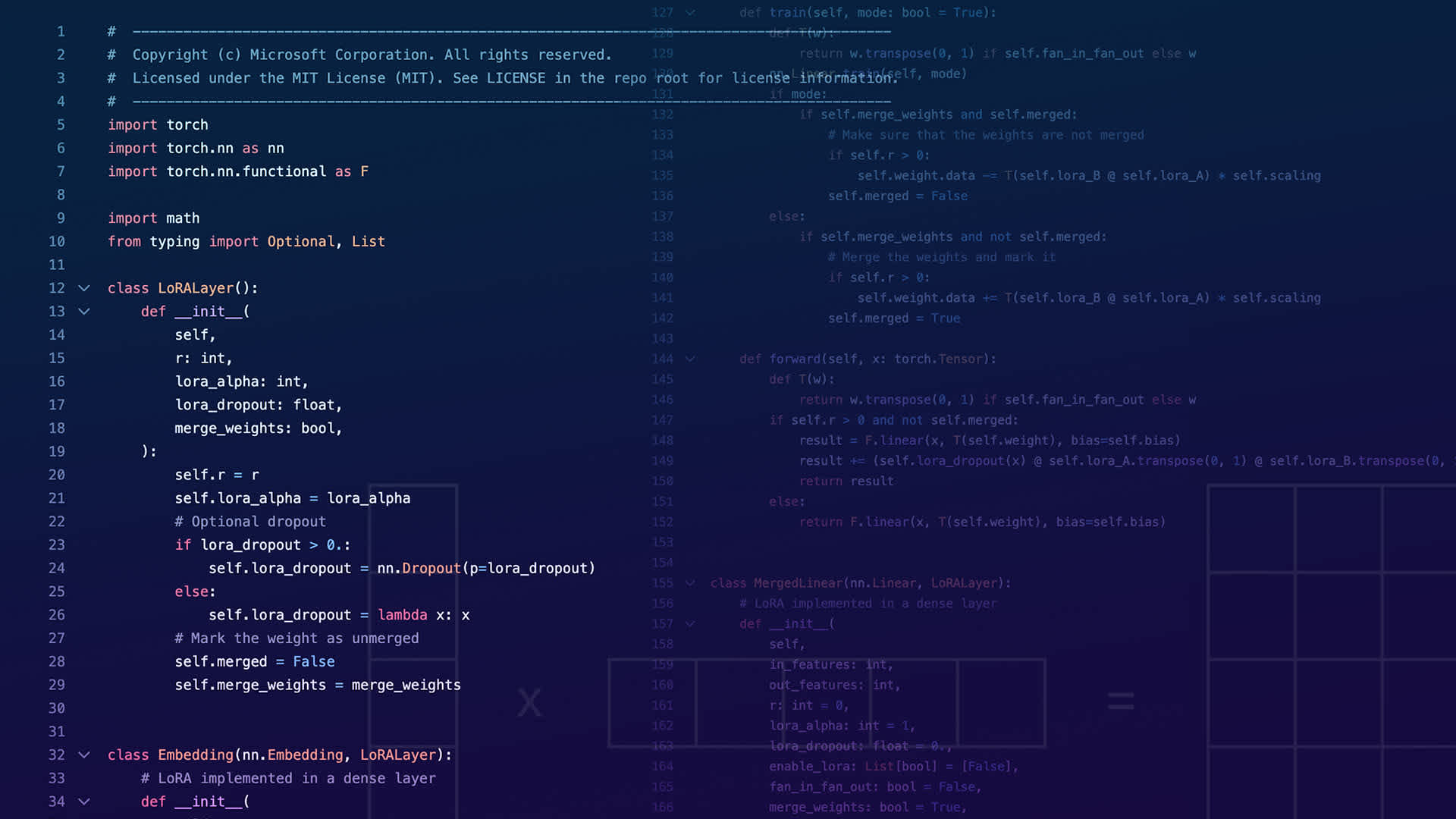

In the Microsoft LoRA repository, which is the implementation they released in 2021 with the paper, their examples used ranks of 8 or 16.

According to Mark Huang from Gradient, you should use a rank of 32 or higher.

However, the more recent QLoRA paper, which introduces a quantized version of LoRA that we'll discuss next, also reports extensive experiments showing very little statistical difference between ranks of 8 and 256:

We find LoRA r is unrelated to final performance if LoRA is used on all layers...

So if your rank is 8 or above, it simply may not matter.

Perhaps this could change as different models come out that use weights and parameters more efficiently to compress information.

For example, Mistral 7B can compete with Llama 13B. Does that mean that the weights in Mistral 7B contain more information, that the model is therefore intrinsically higher rank, and that it requires more precision for LoRA fine-tuning?

I don't know the answer, but I'll be interested to find out.

QLoRA is LoRA 2.0

When I first heard of QLoRA, I assumed that it meant quantizing the model and accepting lower quality outputs.

In fact, the QLoRA team created a special datatype called NormalFloat that allows normally distributed weights to be compressed from 16-bit floats into 4 bits and then restored at the end with minimal loss in accuracy.

If your model uses 16-bit floats and you can store that as 4-bit floats, you've effectively reduced your memory footprint by 4x. That's huge. They made other optimizations as well.
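As a back-of-the-envelope check of the 4x figure, the sketch below counts only the memory needed to hold the weights themselves. The helper `weight_memory_gb` is hypothetical, and the estimate deliberately ignores everything else a real run needs (activations, optimizer state, and the small amount of extra data a quantized format stores alongside the weights).

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

params = 13e9                               # a 13B model, as in the earlier examples
fp16 = weight_memory_gb(params, 16)
nf4 = weight_memory_gb(params, 4)
print(f"16-bit: {fp16:.1f} GB, 4-bit: {nf4:.1f} GB, ratio: {fp16 / nf4:.0f}x")
```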

But importantly, they achieved results on-par with full-parameter fine-tuning too. The precision of the 4-bit NormalFloats is retained after training and converting back into 16-bit floats with no meaningful loss in quality. In other words, your final weights are 16-bit float precision.

QLoRA should be considered a holistic upgrade to LoRA that allows high-quality fine-tuning of even larger models on smaller GPUs than ever before.

LoRA Hyperparameters Besides Rank

In addition to the most common fine-tuning parameters like number of epochs and learning rate, LoRA has the following hyperparameters:

Layers

According to the QLoRA paper, the most important thing you can do to make LoRA fine-tuning effective is to train all layers of the network. Then, and only then, were they able to achieve the quality of full-parameter fine-tuning.

As shown in Figure 2 for LLaMA 7B finetuning on Alpaca, we find that the most critical LoRA hyperparameter is how many LoRA adapters are used in total and that LoRA on all linear transformer block layers are required to match full finetuning performance. Other LoRA hyperparameters, such as the projection dimension r, do not affect performance (see Appendix A).

Alpha

When the weight changes are added back into the original model weights, they are multiplied by a scaling factor that’s calculated as alpha divided by rank.

In the LoRA codebase, Microsoft sets alpha to 2x the rank in all their examples, meaning that the weight changes are doubled when added. Following their practices, if your rank is 8, start with 16 for alpha. If your rank is 16, start with 32.
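The scaling rule can be sketched in a few lines of NumPy. The function name `apply_lora` and the random matrices are illustrative stand-ins, not any library's API; the only thing taken from the text is that the change is multiplied by alpha divided by rank.

```python
import numpy as np

def apply_lora(W, A, B, alpha, rank):
    """Add the change matrices to W, scaled by alpha / rank."""
    scale = alpha / rank
    return W + scale * (B @ A)

rng = np.random.default_rng(0)
rank, d = 8, 16
W = rng.normal(size=(d, d))
A = rng.normal(size=(rank, d))     # random stand-ins for trained change matrices
B = rng.normal(size=(d, rank))

for alpha in (4, 8, 16):
    W_new = apply_lora(W, A, B, alpha, rank)
    change = np.linalg.norm(W_new - W) / np.linalg.norm(B @ A)
    print(f"alpha={alpha}, rank={rank}: change applied at {change:.2f}x")
```

With rank fixed at 8, an alpha of 16 applies the learned change at double strength, an alpha of 8 applies it as-is, and an alpha of 4 applies it at half strength.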

In the QLoRA paper, they used alpha values that were 50% and 25% of rank, and achieved excellent results.

Increasing alpha relative to rank increases the effect of fine-tuning. Decreasing alpha relative to rank decreases it. If you set alpha to the same value as rank, then you’re adding the weight changes as they were determined during training (multiplied by 1x), which makes the most sense to me.

Apparently, you can think of learning rate and alpha as somewhat redundant, so if you're tweaking one you can leave the other constant.

On a side note, it’s unclear to me why implementations of LoRA don't just make the scale factor itself the hyperparameter, which would be a lot more intuitive to work with.

Dropout

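This is the probability that a value will be artificially set to zero for a given batch of training. In common LoRA implementations, dropout is applied to the inputs of the change matrices rather than to the trainable parameters themselves. It’s used to help prevent overfitting the model to your data.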
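A minimal sketch of what a dropout step does, using the "inverted dropout" convention found in common deep learning frameworks, where surviving values are scaled up so the average stays the same. The helper below is written for illustration, and exactly where a given LoRA implementation applies dropout is not something this sketch tries to settle.

```python
import numpy as np

def dropout(values, p, rng):
    """Zero each element with probability p; rescale survivors to keep the expected value."""
    keep = rng.random(values.shape) >= p
    return values * keep / (1.0 - p)

rng = np.random.default_rng(0)
x = np.ones(10_000)
dropped = dropout(x, p=0.1, rng=rng)
print("fraction zeroed:", np.mean(dropped == 0))
print("mean after dropout:", dropped.mean())
```

Dropout is only active during training; at inference time all values pass through unchanged.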

The QLoRA paper set dropout to 0.1 or 10% for fine-tuning 7B and 13B models, and reduced it to 0.05 or 5% for 33B and 65B models.

Wrapping Up

In conclusion, LoRA and especially QLoRA allow us to fine-tune models more efficiently, with quality comparable to full-parameter fine-tuning when you train all the layers of the model.

There is no added overhead in the end when you need to deploy your model, because the weights can simply be added together to create a new fine-tuned model with the same architecture as the original model.

We hope this article has helped explain LoRA fine-tuning in a practical way! If you want to try LoRA in practice, Entry Point is our fine-tuning platform that has integrations with LLM providers that offer it.